For decades, AI has run on silicon, a given that few have questioned or tried to challenge. However, one startup believes the future of computing might be grown in a dish rather than manufactured in a fab. If the company is correct, its technology may hold the answer to how we scale AI sustainably, without constantly running the risk of overloading the global power grid.

Last month, Biological Black Box (BBB), a Baltimore-born startup, emerged from stealth with a bold announcement: its Bionode platform—a computing system that integrates lab-grown neurons with traditional processors—is already powering AI tasks like computer vision and large language model (LLM) acceleration. The company aims to remove our long-standing, global dependence on silicon by offering a more adaptive, energy-efficient alternative to the GPU-dominated status quo.

“The biological network has evolved over hundreds of millions of years into the most efficient computing system ever created,” said Alex Ksendzovsky, BBB’s co-founder and CEO. “Now we can start to use it for artificial intelligence.”

A Living Co-Processor for AI

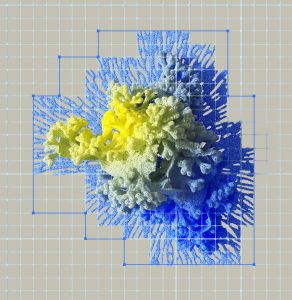

BBB’s platform uses neurons grown from human stem cells and rat-derived tissue, cultivated in a lab and placed atop a microelectrode array with 4,096 contact points. “We have multiple models that we use,” said Ksendzovsky. “One of those models is from rat cells. One of those models is from actual human stem cells converted into neurons.”

Each array contains “hundreds of thousands of them,” he said, referring to neurons that can live for over a year and continuously rewire themselves in response to inputs. BBB believes this self-organizing behavior could radically improve AI training and inference.

“We’ve built a closed-loop system that allows neurons to rewire themselves, increasing efficiency and accuracy for AI tasks,” Ksendzovsky said.

And for those wary of the idea of using live cells, BBB’s current chip model doesn’t require a full-scale brain. “We don’t need millions of neurons to process the entire environment like a brain does,” Ksendzovsky said. “We use only what’s necessary for specific tasks, keeping ethical considerations in mind.”

Applications in Vision and Language

The company isn’t just theorizing. Its Bionode chips have already been deployed in two foundational AI domains: vision and language.

“We’re already applying biological computing to computer vision,” said Ksendzovsky. “We can encode images into a biological network, let neurons process them, and then decode the neural response to improve classification accuracy.”
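The encode, process, decode loop Ksendzovsky describes can be pictured with a small software sketch. Everything here is an illustrative assumption rather than BBB’s actual method: the living network is replaced by a hypothetical `SimulatedNetwork` with fixed random wiring, the 4,096 contact points are assumed to form a 64 x 64 grid with one pixel per electrode, and the decoder is a plain nearest-centroid classifier chosen only for brevity.

```python
import math
import random

GRID_SIDE = 64                        # assumption: the contact points form a 64 x 64 grid
N_ELECTRODES = GRID_SIDE * GRID_SIDE  # 4,096 contact points, per the article


def encode_image(pixels):
    """Map a flat list of grayscale pixels (0-255) to one stimulation level per electrode."""
    if len(pixels) != N_ELECTRODES:
        raise ValueError("expected one pixel per electrode")
    return [p / 255.0 for p in pixels]


class SimulatedNetwork:
    """Hypothetical stand-in for the living network: fixed, sparse, random wiring."""

    def __init__(self, fan_in=8, seed=0):
        rng = random.Random(seed)
        # Each output site listens to `fan_in` randomly chosen electrodes.
        self.wiring = [
            [(rng.randrange(N_ELECTRODES), rng.uniform(-1.0, 1.0)) for _ in range(fan_in)]
            for _ in range(N_ELECTRODES)
        ]

    def respond(self, stimulation):
        """Return one simulated response per electrode for a stimulation pattern."""
        return [
            math.tanh(sum(weight * stimulation[src] for src, weight in inputs))
            for inputs in self.wiring
        ]


class NearestCentroidDecoder:
    """Decode a response by finding the closest per-class average response."""

    def __init__(self):
        self.centroids = {}

    def fit(self, responses, labels):
        grouped = {}
        for response, label in zip(responses, labels):
            grouped.setdefault(label, []).append(response)
        for label, group in grouped.items():
            self.centroids[label] = [sum(col) / len(group) for col in zip(*group)]

    def predict(self, response):
        def squared_distance(centroid):
            return sum((a - b) ** 2 for a, b in zip(response, centroid))

        return min(self.centroids, key=lambda label: squared_distance(self.centroids[label]))


def classify(pixels, network, decoder):
    """Full path: encode the image, let the network respond, decode the response."""
    return decoder.predict(network.respond(encode_image(pixels)))


def half_bright_image(side, level=255):
    """Toy data: an image whose left or right half is lit at `level`."""
    lit_left = side == "left"
    return [
        level if ((i % GRID_SIDE) < GRID_SIDE // 2) == lit_left else 0
        for i in range(N_ELECTRODES)
    ]
```

To exercise the sketch, fit the decoder on the simulated responses to one left-bright and one right-bright toy image, then call `classify` on a new image. In a real system the `respond` step would be replaced by stimulating and recording from the electrode array, which is the part this sketch cannot represent.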

BBB is also using its system to accelerate LLM training, an area notorious for its high computing and energy demands. “One of our biggest breakthroughs is using biological networks to train LLMs more efficiently, reducing the massive energy consumption required today,” he said.

Strategizing Sustainability with Nvidia

While BBB’s ambitions may seem disruptive at first glance, the company is positioning itself as a complement to current AI hardware leaders, not a competitor. It is currently a member of Nvidia’s Inception incubator, a membership that lends credibility to that positioning.

“We don’t see ourselves as direct competitors to Nvidia, at least not in the near future,” Ksendzovsky noted. “Biological computing and silicon computing will coexist. We still need GPUs and CPUs to process the data coming from neurons.”

He added, “We can use our biological networks to augment and improve silicon-based AI models, making them more accurate and more energy-efficient.”

Moving Toward a Modular AI Future

Ksendzovsky believes that as AI workloads diversify, the hardware will need to become more modular—and biology will be part of that toolkit.

“The future of computing will be a modular ecosystem where traditional silicon, biological computing, and quantum computing each play a role based on their strengths,” he said.

BBB is focused on carving out a niche in the AI hardware landscape. Although its systems aren’t intended to replace GPUs today, they offer a new class of computing that addresses some of the most urgent bottlenecks in AI: energy usage, retraining costs, and the difficulty of efficient, real-time learning.

Building a future where AI can coexist with the natural world may come down not to how we build chips but to how we grow them.

Featured Image Credit: Photo by Athena Sandrini; Pexels